As in previous cycles, I’ve updated some of the Nova-specific dashboards available within the excellent gerrit-dash-creator project and started using them prior to dropping offline on paternity leave.

I’d really like to see more use of these dashboards within Nova to help focus our limited review bandwidth on active and mergeable changes, so if you have any ideas please fire off reviews and add me in!

For now I’ve linked below to some of the dashboards I’ve been using most often, with a brief summary and a dump of the current .dash logic used by the gerrit-dash-creator tooling to build the Gerrit dashboard URLs.
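
To give a feel for how a .dash file maps onto a dashboard URL, here is a minimal Python sketch. It is not the gerrit-dash-creator implementation; it assumes the Gerrit custom dashboard URL form `/#/dashboard/?title=...&foreach=...&<section name>=<query>` and only carries over the title, the foreach filter and the sections. The `GERRIT_BASE` value and the `build_dashboard_url` name are assumptions made for illustration.

```python
import configparser
import re
from urllib.parse import urlencode

# Assumed base URL; substitute your own Gerrit instance.
GERRIT_BASE = "https://review.opendev.org"

# Matches section headers such as: section "Needs final +2"
SECTION_RE = re.compile(r'^section\s+"(.*)"$')


def build_dashboard_url(dash_text, base_url=GERRIT_BASE):
    """Turn the text of a .dash file into a Gerrit custom dashboard URL."""
    # Only "=" is treated as a delimiter, so the ":" inside queries stays
    # part of the value. Interpolation is disabled to keep queries verbatim.
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.read_string(dash_text)

    params = []
    dashboard = parser["dashboard"]
    for key in ("title", "foreach"):
        if key in dashboard:
            # Collapse indented continuation lines into one spaced value.
            params.append((key, " ".join(dashboard[key].split())))

    # Each [section "name"] becomes a name=query pair, in file order.
    for name in parser.sections():
        match = SECTION_RE.match(name)
        if match:
            query = " ".join(parser[name]["query"].split())
            params.append((match.group(1), query))

    return f"{base_url}/#/dashboard/?{urlencode(params)}"
```

Passing the text of any of the .dash dumps below to `build_dashboard_url` should give a URL of the same general shape as the ones the real tooling produces, although the exact encoding may differ.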

nova-specs

The openstack/nova-specs repo contains Nova design specifications associated with both the previous and current development releases. This dashboard specifically targets the current development release, as we should only see reviews landing in Gerrit referring to this release at present.

[dashboard]

title = Nova Specs - Victoria

description = Review Inbox

foreach = project:openstack/nova-specs status:open NOT label:Workflow<=-1 branch:master NOT owner:self

[section "You are a reviewer, but haven't voted in the current revision"]

query = file:^specs/victoria/.* NOT label:Code-Review<=-1,self NOT label:Code-Review>=1,self reviewer:self label:Verified>=1,zuul

[section "Not blocked by -2s"]

query = file:^specs/victoria/.* NOT label:Code-Review<=-2 NOT label:Code-Review>=2 NOT label:Code-Review<=-1,self NOT label:Code-Review>=1,self label:Verified>=1,zuul

[section "No votes and spec is > 1 week old"]

query = file:^specs/victoria/.* NOT label:Code-Review>=-2 age:7d label:Verified>=1,zuul

[section "Needs final +2"]

query = file:^specs/victoria/.* label:Code-Review>=2 NOT label:Code-Review<=-1,self NOT label:Code-Review>=1,self label:Verified>=1,zuul NOT label:workflow>=1

[section "Broken Specs (doesn't pass Zuul)"]

query = file:^specs/victoria/.* label:Verified<=-1,zuul

[section "Dead Specs (blocked by a -2)"]

query = file:^specs/victoria/.* label:Code-Review<=-2

[section "Dead Specs (Not Proposed for Victoria)"]

query = NOT file:^specs/victoria/.* file:^specs/.*

[section "Not Specs (tox.ini etc)"]

query = NOT file:^specs/.*

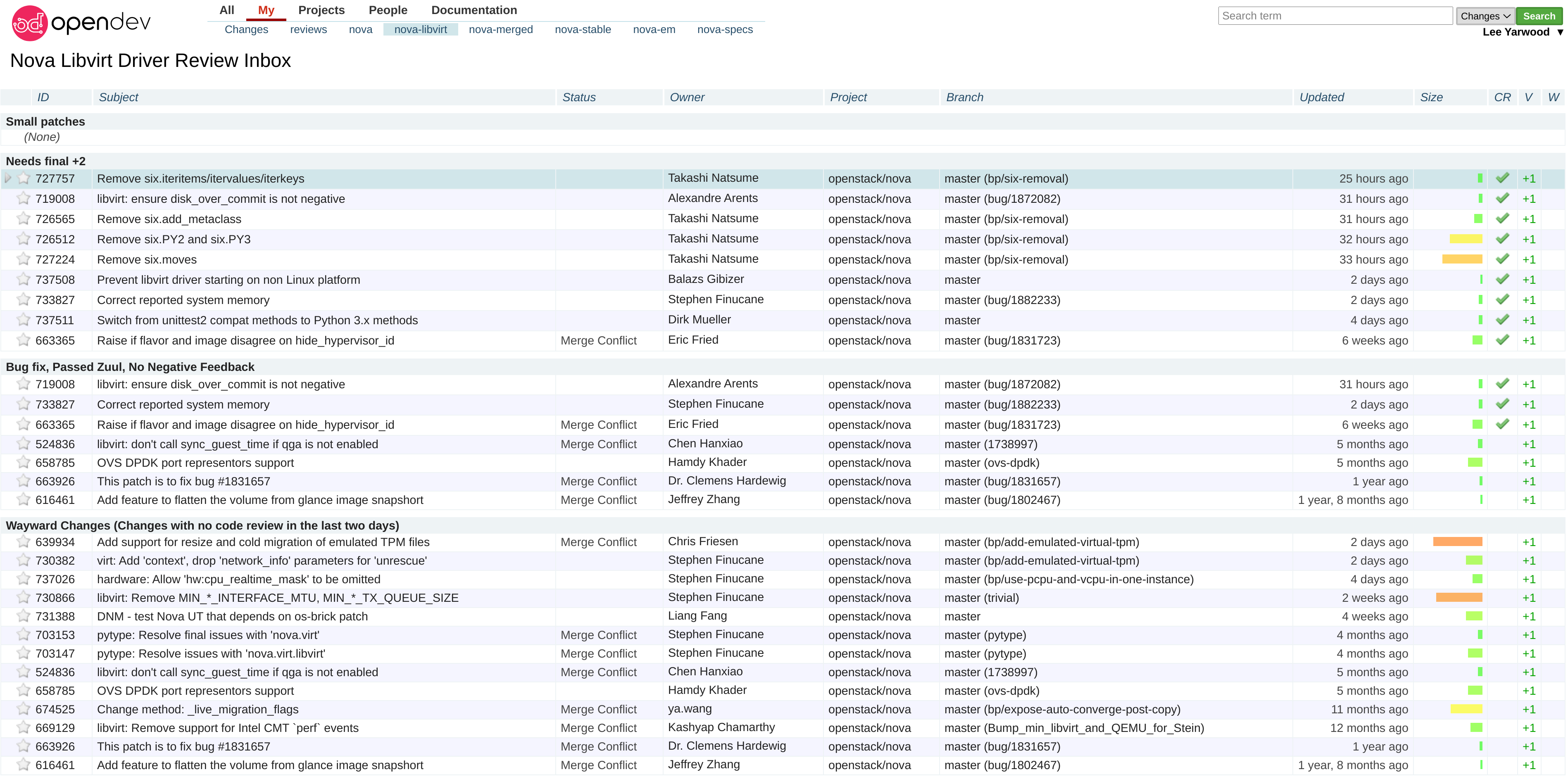
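
The last few sections of this dashboard split changes purely on which files they touch. A rough Python sketch of that file-based bucketing follows, assuming Gerrit's `file:^regex` operator matches when any path in the change matches the regex. The function name and bucket labels are hypothetical, and vote-based conditions such as Code-Review and Verified are deliberately left out.

```python
import re

# The two file regexes used by the dashboard queries above.
VICTORIA = re.compile(r"^specs/victoria/.*")
ANY_SPEC = re.compile(r"^specs/.*")


def classify_change(paths):
    """Return the file-based bucket for the paths touched by a change."""
    touches_victoria = any(VICTORIA.match(p) for p in paths)
    touches_specs = any(ANY_SPEC.match(p) for p in paths)
    if touches_victoria:
        # file:^specs/victoria/.*
        return "victoria"
    if touches_specs:
        # NOT file:^specs/victoria/.* file:^specs/.*
        return "not-proposed-for-victoria"
    # NOT file:^specs/.*
    return "not-specs"
```

For example, a change that only edits `tox.ini` falls into the "Not Specs" bucket, while one that adds a file under `specs/ussuri/` ends up under "Dead Specs (Not Proposed for Victoria)".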

nova-libvirt

I introduced this dashboard after the libvirt subteam was created during the U cycle. As you can see from the foreach filter, the dashboard only lists changes touching the standard set of libvirt driver-related files within the openstack/nova codebase. IMHO a dashboard for non-libvirt drivers would also be useful.

[dashboard]

title = Nova Libvirt Driver Review Inbox

description = Review Inbox for the Nova Libvirt Driver

foreach = project:openstack/nova status:open NOT owner:self NOT label:Workflow<=-1 label:Verified>=1,zuul NOT reviewedby:self branch:master (file:^nova/virt/libvirt/.* OR file:^nova/tests/unit/libvirt/.* OR file:^nova/tests/functional/libvirt/.*)

[section "Small patches"]

query = NOT label:Code-Review>=2,self NOT label:Code-Review<=-1,nova-core NOT message:"DNM" delta:<=10

[section "Needs final +2"]

query = NOT label:Code-Review>=2,self label:Code-Review>=2 limit:50 NOT label:workflow>=1

[section "Bug fix, Passed Zuul, No Negative Feedback"]

query = NOT label:Code-Review>=2,self NOT label:Code-Review<=-1,nova-core message:"bug: " limit:50

[section "Wayward Changes (Changes with no code review in the last two days)"]

query = NOT label:Code-Review<=-1 NOT label:Code-Review>=1 age:2d limit:50

[section "Needs feedback (Changes older than 5 days that have not been reviewed by anyone)"]

query = NOT label:Code-Review<=-1 NOT label:Code-Review>=1 age:5d limit:50

[section "Passed Zuul, No Negative Feedback"]

query = NOT label:Code-Review>=2 NOT label:Code-Review<=-1 limit:50

[section "Needs revisit (You were a reviewer but haven't voted in the current revision)"]

query = reviewer:self limit:50
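
Gerrit appends the foreach filter to every section query, which is why each section above only needs to state what makes it different. The sketch below shows that composition and, as an assumption about how you might want to use it, builds a Gerrit REST `/changes/?q=` search URL for a single section. The helper names and the default base URL are illustrative only.

```python
from urllib.parse import urlencode


def effective_query(section_query, foreach):
    """Combine a section query with the dashboard-wide foreach filter."""
    # Normalise whitespace so multi-line foreach values collapse cleanly.
    return " ".join(f"{section_query} {foreach}".split())


def changes_search_url(section_query, foreach,
                       base_url="https://review.opendev.org"):
    """Build a Gerrit REST /changes/ search URL for one dashboard section."""
    query = effective_query(section_query, foreach)
    return f"{base_url}/changes/?{urlencode({'q': query})}"
```

Calling `effective_query('reviewer:self limit:50', 'project:openstack/nova status:open')` yields `reviewer:self limit:50 project:openstack/nova status:open`, which can be pasted straight into the Gerrit search box to check a section in isolation.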

nova-stable

I have been a Nova Stable Core for a few years now, and during that time I have relied heavily on Gerrit dashboards and queries to help keep track of changes as they move through our many stable branches. This has been made slightly more complex by the introduction of extended-maintenance branches, but more on that below. For now this dashboard covers the ussuri, train and stein stable branches.

I’m currently using the by-branch Nova stable dashboards, as these allow me to track changes through each required branch easily without any additional clicking within Gerrit. There is, however, an allinone dashboard if you prefer that approach.

Finally, anyone paying attention might have noticed that I’m also using a nova-merged query in Gerrit to track changes recently merged into master. This has helped me catch and proactively backport useful fixes to stable many times.

[dashboard]

title = Nova Stable Maintenance Review Inbox

description = Review Inbox

foreach = (project:openstack/nova OR project:openstack/python-novaclient) status:open NOT owner:self NOT label:Workflow<=-1 label:Verified>=1,zuul NOT reviewedby:self

[section " stable/ussuri You are a reviewer, but haven't voted in the current revision"]

query = NOT label:Code-Review<=-1,self NOT label:Code-Review>=1,self reviewer:self branch:stable/ussuri

[section "stable/ussuri Needs final +2"]

query = label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 NOT label:workflow>=1 branch:stable/ussuri

[section "stable/ussuri Passed Zuul, No Negative Core Feedback"]

query = NOT label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 branch:stable/ussuri

[section " stable/train You are a reviewer, but haven't voted in the current revision"]

query = NOT label:Code-Review<=-1,self NOT label:Code-Review>=1,self reviewer:self branch:stable/train

[section "stable/train Needs final +2"]

query = label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 NOT label:workflow>=1 branch:stable/train

[section "stable/train Passed Zuul, No Negative Core Feedback"]

query = NOT label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 branch:stable/train

[section " stable/stein You are a reviewer, but haven't voted in the current revision"]

query = NOT label:Code-Review<=-1,self NOT label:Code-Review>=1,self reviewer:self branch:stable/stein

[section "stable/stein Needs final +2"]

query = label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 NOT label:workflow>=1 branch:stable/stein

[section "stable/stein Passed Zuul, No Negative Core Feedback"]

query = NOT label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 branch:stable/stein
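
Since the by-branch dashboard repeats the same three sections for every branch, the sections could be generated rather than written by hand. The following Python sketch does that under the assumption that only the branch name varies between the groups. The `SECTION_TEMPLATES` and `render_branch_sections` names are hypothetical and not part of gerrit-dash-creator.

```python
# (title suffix, query without the branch filter), copied from the dump above.
SECTION_TEMPLATES = [
    ("You are a reviewer, but haven't voted in the current revision",
     "NOT label:Code-Review<=-1,self NOT label:Code-Review>=1,self "
     "reviewer:self"),
    ("Needs final +2",
     "label:Code-Review>=2 "
     "NOT(reviewerin:stable-maint-core label:Code-Review<=-1) "
     "limit:50 NOT label:workflow>=1"),
    ("Passed Zuul, No Negative Core Feedback",
     "NOT label:Code-Review>=2 "
     "NOT(reviewerin:stable-maint-core label:Code-Review<=-1) "
     "limit:50"),
]


def render_branch_sections(branches):
    """Render the repeated .dash sections for each branch, in order."""
    lines = []
    for branch in branches:
        for title, query in SECTION_TEMPLATES:
            lines.append(f'[section "{branch} {title}"]')
            lines.append(f"query = {query} branch:{branch}")
            lines.append("")  # blank line between sections
    return "\n".join(lines)
```

Calling `render_branch_sections(["stable/ussuri", "stable/train", "stable/stein"])` reproduces the nine sections above, and swapping in a different list of branches gives the equivalent sections for any other set of stable branches.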

nova-em

In addition to the nova-stable dashboard above, I also have a dashboard for our extended-maintenance branches. At present these are (or are about to be) rocky, queens and pike.

[dashboard]

title = Nova Extended Maintenance Review Inbox

description = Review Inbox

foreach = (project:openstack/nova OR project:openstack/python-novaclient) status:open NOT owner:self NOT label:Workflow<=-1 label:Verified>=1,zuul NOT reviewedby:self

[section " stable/rocky You are a reviewer, but haven't voted in the current revision"]

query = NOT label:Code-Review<=-1,self NOT label:Code-Review>=1,self reviewer:self branch:stable/rocky

[section "stable/rocky Needs final +2"]

query = label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 NOT label:workflow>=1 branch:stable/rocky

[section "stable/rocky Passed Zuul, No Negative Core Feedback"]

query = NOT label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 branch:stable/rocky

[section " stable/queens You are a reviewer, but haven't voted in the current revision"]

query = NOT label:Code-Review<=-1,self NOT label:Code-Review>=1,self reviewer:self branch:stable/queens

[section "stable/queens Needs final +2"]

query = label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 NOT label:workflow>=1 branch:stable/queens

[section "stable/queens Passed Zuul, No Negative Core Feedback"]

query = NOT label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 branch:stable/queens

[section " stable/pike You are a reviewer, but haven't voted in the current revision"]

query = NOT label:Code-Review<=-1,self NOT label:Code-Review>=1,self reviewer:self branch:stable/pike

[section "stable/pike Needs final +2"]

query = label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 NOT label:workflow>=1 branch:stable/pike

[section "stable/pike Passed Zuul, No Negative Core Feedback"]

query = NOT label:Code-Review>=2 NOT(reviewerin:stable-maint-core label:Code-Review<=-1) limit:50 branch:stable/pike