Welcome to part #4 of this series following the development of instance types and preferences within KubeVirt!

What’s new

inferFromVolume

This feature has now landed in full within KubeVirt with some subtle changes:

https://github.com/kubevirt/kubevirt/pull/8480

The previously discussed annotations have been replaced by labels, allowing users (such as the downstream OpenShift UI within Red Hat) to use server-side filtering to find suitably decorated resources within a given cluster.

$ env | grep KUBEVIRT

KUBEVIRT_PROVIDER=k8s-1.24

KUBEVIRT_MEMORY=16384

KUBEVIRT_STORAGE=rook-ceph-default

[..]

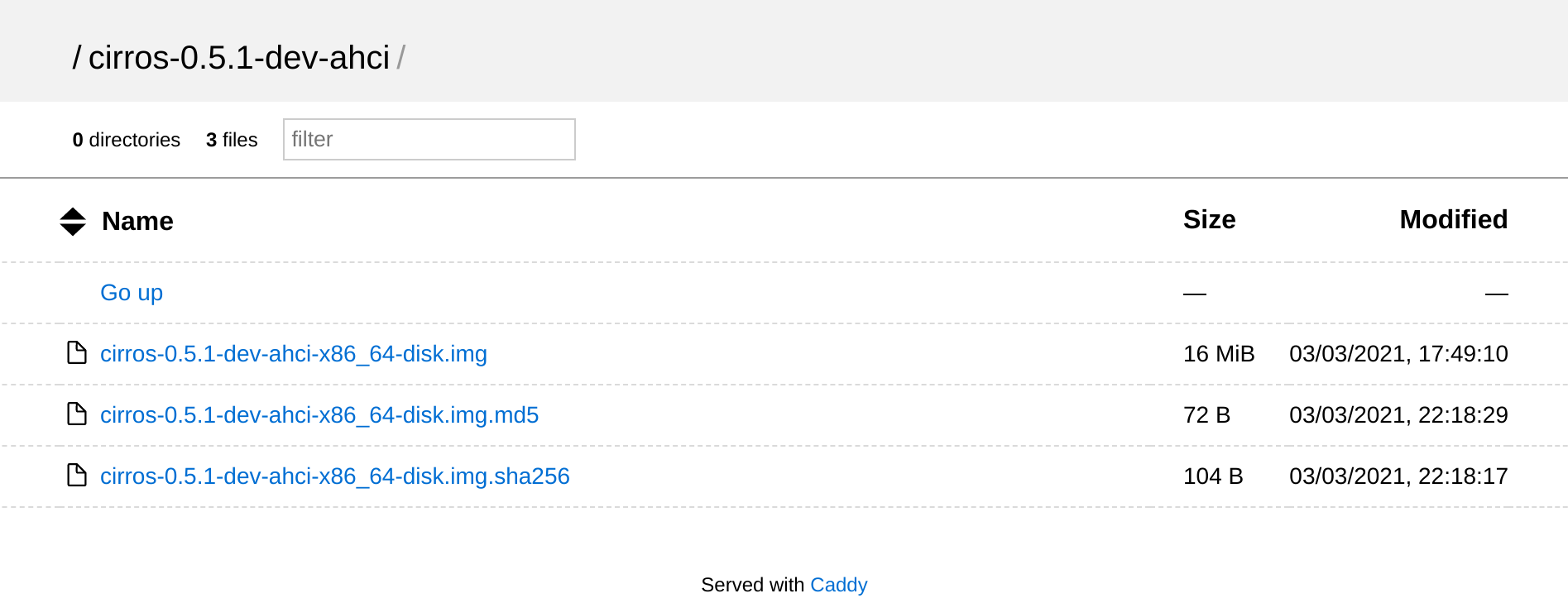

$ wget https://github.com/cirros-dev/cirros/releases/download/0.6.1/cirros-0.6.1-x86_64-disk.img

[..]

$ ./cluster-up/virtctl.sh image-upload pvc cirros --size=1Gi --image-path=./cirros-0.6.1-x86_64-disk.img

[..]

$ ./cluster-up/kubectl.sh kustomize https://github.com/kubevirt/common-instancetypes.git | ./cluster-up/kubectl.sh apply -f -

[..]

$ ./cluster-up/kubectl.sh label pvc/cirros instancetype.kubevirt.io/default-instancetype=server.tiny instancetype.kubevirt.io/default-preference=cirros

$ ./cluster-up/kubectl.sh get pvc/cirros -o json | jq .metadata.labels

selecting docker as container runtime

{
  "instancetype.kubevirt.io/default-instancetype": "server.tiny",
  "instancetype.kubevirt.io/default-preference": "cirros"
}

[..]

$ ./cluster-up/kubectl.sh apply -f - << EOF
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: cirros
spec:
  instancetype:
    inferFromVolume: cirros-disk
  preference:
    inferFromVolume: cirros-disk
  running: true
  template:
    spec:
      domain:
        devices: {}
      volumes:
      - persistentVolumeClaim:
          claimName: cirros
        name: cirros-disk
EOF

[..]

$ ./cluster-up/kubectl.sh get vms/cirros -o json | jq '.spec.instancetype, .spec.preference'

selecting docker as container runtime

{
  "kind": "virtualmachineclusterinstancetype",
  "name": "server.tiny",
  "revisionName": "cirros-server.tiny-ef0cbfb6-b48c-4e9f-aa7a-a06878b42503-1"
}
{
  "kind": "virtualmachineclusterpreference",
  "name": "cirros",
  "revisionName": "cirros-cirros-5bddae5d-47f8-433b-afa2-d4f846ef1830-1"
}
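The inference flow shown above can be modelled roughly as follows. This is a simplified Python sketch, not KubeVirt's actual Go implementation: the function name `infer_from_volume` and the plain dictionaries standing in for the VirtualMachine and PVC objects are assumptions made for illustration. Only the label keys and values come from the example.

```python
# Label keys taken from the example above.
DEFAULT_INSTANCETYPE_LABEL = "instancetype.kubevirt.io/default-instancetype"
DEFAULT_PREFERENCE_LABEL = "instancetype.kubevirt.io/default-preference"


def infer_from_volume(vm, pvcs, volume_name, label):
    """Return the value of `label` on the PVC backing `volume_name`.

    `vm` is a dict shaped like a VirtualMachine and `pvcs` maps PVC names to
    their metadata.labels. Both are hypothetical stand-ins for API lookups.
    """
    for volume in vm["spec"]["template"]["spec"]["volumes"]:
        if volume["name"] != volume_name:
            continue
        claim = volume.get("persistentVolumeClaim")
        if claim is None:
            raise ValueError(f"volume {volume_name!r} is not backed by a PVC")
        labels = pvcs[claim["claimName"]]
        if label not in labels:
            raise KeyError(f"PVC {claim['claimName']!r} is missing label {label!r}")
        return labels[label]
    raise ValueError(f"volume {volume_name!r} not found in VirtualMachine")


vm = {
    "spec": {
        "instancetype": {"inferFromVolume": "cirros-disk"},
        "preference": {"inferFromVolume": "cirros-disk"},
        "template": {"spec": {"volumes": [
            {"name": "cirros-disk", "persistentVolumeClaim": {"claimName": "cirros"}},
        ]}},
    }
}
pvcs = {
    "cirros": {
        DEFAULT_INSTANCETYPE_LABEL: "server.tiny",
        DEFAULT_PREFERENCE_LABEL: "cirros",
    }
}

instancetype = infer_from_volume(
    vm, pvcs, vm["spec"]["instancetype"]["inferFromVolume"], DEFAULT_INSTANCETYPE_LABEL
)
preference = infer_from_volume(
    vm, pvcs, vm["spec"]["preference"]["inferFromVolume"], DEFAULT_PREFERENCE_LABEL
)
print(instancetype, preference)  # server.tiny cirros
```

The sketch only covers PVC-backed volumes, matching the example. Other volume types would need their own lookup.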

Changes have also been made to the CDI project to ensure these labels are passed down when importing volumes into an environment using the DataImportCron resource. Any DataVolumes, DataSources or PVCs created by this process will have these labels copied over from the initial DataImportCron. The following example is from an environment where the SSP operator has deployed a labelled DataImportCron to CDI:

$ kubectl get all,pvc -A -l instancetype.kubevirt.io/default-preference
NAMESPACE            NAME                                        AGE
kubevirt-os-images   datasource.cdi.kubevirt.io/centos-stream8   31m

NAMESPACE            NAME                                                       AGE
kubevirt-os-images   dataimportcron.cdi.kubevirt.io/centos-stream8-image-cron   4m29s

NAMESPACE            NAME                                                STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
kubevirt-os-images   persistentvolumeclaim/centos-stream8-2f16c067b974   Bound    pvc-4be6ea30-9d7d-480a-828c-38fa2abc6597   10Gi       RWX            rook-ceph-block   4m19s

$ kubectl get persistentvolumeclaim/centos-stream8-2f16c067b974 -n kubevirt-os-images -o json | jq .metadata.labels

{
  "app": "containerized-data-importer",
  "app.kubernetes.io/component": "storage",
  "app.kubernetes.io/managed-by": "cdi-controller",
  "cdi.kubevirt.io/dataImportCron": "centos-stream8-image-cron",
  "instancetype.kubevirt.io/default-instancetype": "server.medium",
  "instancetype.kubevirt.io/default-preference": "centos.8.stream"
}
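The label pass-down described above could be sketched like this. It is a minimal Python illustration, not CDI's actual code: the helper name `propagate_instancetype_labels` is hypothetical, and selecting labels by the `instancetype.kubevirt.io/` prefix is an assumption (CDI may instead copy a fixed set of keys).

```python
# Assumption: labels are selected by prefix; CDI may copy specific keys instead.
INSTANCETYPE_LABEL_PREFIX = "instancetype.kubevirt.io/"


def propagate_instancetype_labels(source_labels, target_labels):
    """Return a copy of `target_labels` with instancetype labels from the source added."""
    merged = dict(target_labels)
    for key, value in source_labels.items():
        if key.startswith(INSTANCETYPE_LABEL_PREFIX):
            merged[key] = value
    return merged


# Labels on the DataImportCron; the last entry is a hypothetical unrelated label.
cron_labels = {
    "instancetype.kubevirt.io/default-instancetype": "server.medium",
    "instancetype.kubevirt.io/default-preference": "centos.8.stream",
    "example.com/unrelated": "not-copied",
}
# Labels the imported PVC would otherwise carry.
pvc_labels = {
    "app": "containerized-data-importer",
    "cdi.kubevirt.io/dataImportCron": "centos-stream8-image-cron",
}

result = propagate_instancetype_labels(cron_labels, pvc_labels)
for key in sorted(result):
    print(f"{key}={result[key]}")
```

Returning a new dictionary rather than mutating the target keeps the sketch free of side effects, which makes it easier to reason about when the same source labels are applied to several child resources.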

I plan on recording and posting an updated demo shortly.

PreferredStorageClassName

A new PreferredStorageClassName preference has been added:

https://github.com/kubevirt/kubevirt/pull/8802

common-instancetypes

The common-instancetypes project has moved under the kubevirt namespace and has had a number of rc releases:

https://github.com/kubevirt/common-instancetypes/releases

Recent changes include the introduction of new instancetypes and preferences, along with various bits of housekeeping.

The VirtualMachineCluster{Instancetype,Preference} resources are now also deployed by the SSP operator by default:

https://github.com/kubevirt/ssp-operator/pull/453

$ kubectl get all -A -l app.kubernetes.io/name=common-instancetypes

NAMESPACE NAME AGE

virtualmachineclusterpreference.instancetype.kubevirt.io/alpine 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/centos.7 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/centos.7.desktop 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/centos.8.stream 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/centos.8.stream.desktop 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/centos.9.stream 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/centos.9.stream.desktop 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/cirros 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/fedora 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/rhel.7 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/rhel.7.desktop 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/rhel.8 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/rhel.8.desktop 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/rhel.9 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/rhel.9.desktop 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/ubuntu 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.10 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.10.virtio 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.11 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.11.virtio 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.2k12 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.2k12.virtio 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.2k16 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.2k16.virtio 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.2k19 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.2k19.virtio 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.2k22 6h17m

virtualmachineclusterpreference.instancetype.kubevirt.io/windows.2k22.virtio 6h17m

NAMESPACE NAME AGE

virtualmachineclusterinstancetype.instancetype.kubevirt.io/cx1.2xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/cx1.4xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/cx1.8xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/cx1.large 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/cx1.medium 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/cx1.xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/gn1.2xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/gn1.4xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/gn1.8xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/gn1.xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/highperformance.large 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/highperformance.medium 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/highperformance.small 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/m1.2xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/m1.4xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/m1.8xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/m1.large 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/m1.xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/n1.2xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/n1.4xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/n1.8xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/n1.large 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/n1.medium 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/n1.xlarge 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/server.large 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/server.medium 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/server.micro 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/server.small 6h17m

virtualmachineclusterinstancetype.instancetype.kubevirt.io/server.tiny 6h17m

virtctl create vm

A new virtctl command has been introduced that generates a VirtualMachine definition:

https://github.com/kubevirt/kubevirt/pull/8878

This includes basic support for Instance types and Preferences, with support for inferFromVolume hopefully landing in the near future:

$ virtctl create vm --instancetype foo --preference bar --running --volume-clone-ds=example/datasource --name test

apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  creationTimestamp: null
  name: test
spec:
  dataVolumeTemplates:
  - metadata:
      creationTimestamp: null
      name: test-ds-datasource
    spec:
      sourceRef:
        kind: DataSource
        name: datasource
        namespace: example
      storage:
        resources: {}
  instancetype:
    name: foo
  preference:
    name: bar
  running: true
  template:
    metadata:
      creationTimestamp: null
    spec:
      domain:
        devices: {}
        resources: {}
      terminationGracePeriodSeconds: 180
      volumes:
      - dataVolume:
          name: test-ds-datasource
        name: test-ds-datasource
status: {}

What’s coming next

Support for Resource Requests

Work is under way to add resource requests to Instance types:

https://github.com/kubevirt/kubevirt/pull/8729

This will close an existing gap with Instance types and allow us to once again use the dedicatedCPUPlacement feature, which requires resource requests and is currently blocked.

v1alpha3

The introduction of resource requests, and a possible move to make the guest-visible resource requests optional, has prompted us to look at introducing yet another alpha version of the API:

https://github.com/kubevirt/kubevirt/pull/9052

The logic is that we can’t make part of the API optional without moving to a new version, and we can’t move to v1beta1 while still making changes to the API. This version should remain backward compatible with the older versions, but more work is needed to determine whether a conversion strategy is required for stored objects, both in etcd and in ControllerRevisions.

<=v1alpha2 Deprecation

With the introduction of a new API version I also want to start looking into what it will take to deprecate our older versions while we are still in alpha:

https://github.com/kubevirt/kubevirt/issues/9051

This issue sets out the following tasks to be investigated:

- [ ] Introduce a new v1alpha3 version ahead of backward-incompatible changes landing

- [ ] Deprecate v1alpha1 and v1alpha2 versions

- [ ] Implement a conversion strategy for stored objects from v1alpha1 and v1alpha2

- [ ] Implement a conversion strategy for objects stored in ControllerRevisions associated with existing VirtualMachines

This work could well be deferred until after v1beta1, but it’s still a useful mental exercise to plan out what will eventually be required.

Preference Resource Requirements

A while ago I quickly drafted an idea around expressing the resource requirements of a workload within VirtualMachinePreferenceSpec:

https://github.com/kubevirt/kubevirt/pull/8780

The PR is still pretty rough, but the demo text included sets out what I’d like to achieve with the feature eventually. The general idea is to ensure that an Instance type or raw VirtualMachine definition using a given Preference provides the resources required to run a given workload correctly.

$ ./cluster-up/kubectl.sh apply -f https://raw.githubusercontent.com/kubevirt/common-instancetypes/main/common-instancetypes-all-bundle.yaml

[..]

$ ./cluster-up/kubectl.sh get VirtualMachinePreference cirros -o json | jq .spec

selecting docker as container runtime

{
  "devices": {
    "preferredDiskBus": "virtio",
    "preferredInterfaceModel": "virtio"
  }
}

$ ./cluster-up/kubectl.sh patch VirtualMachinePreference cirros --type=json -p='[{"op": "add", "path": "/spec/requirements", "value": {"cpu":{"guest": 2}}}]'

$ ./cluster-up/kubectl.sh get VirtualMachinePreference cirros -o json | jq .spec

selecting docker as container runtime

{
  "devices": {
    "preferredDiskBus": "virtio",
    "preferredInterfaceModel": "virtio"
  },
  "requirements": {
    "cpu": {
      "guest": 2
    }
  }
}

$ ./cluster-up/kubectl.sh get virtualmachineinstancetype server.tiny -o json | jq .spec

selecting docker as container runtime

{
  "cpu": {
    "guest": 1
  },
  "memory": {
    "guest": "1.5Gi"
  }
}

$ ./cluster-up/kubectl.sh apply -f - << EOF
---
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: preference-requirements-demo
spec:
  instancetype:
    name: server.tiny
    kind: virtualmachineinstancetype
  preference:
    name: cirros
    kind: virtualmachinepreference
  running: false
  template:
    spec:
      domain:
        devices: {}
      volumes:
      - containerDisk:
          image: registry:5000/kubevirt/cirros-container-disk-demo:devel
        name: containerdisk
EOF

The request is invalid: spec.instancetype: Failure checking preference requirements: Insufficient CPU resources of 1 vCPU provided by instance type, preference requires 2 vCPU

$ ./cluster-up/kubectl.sh apply -f - << EOF
---
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: preference-requirements-demo
spec:
  preference:
    name: cirros
    kind: virtualmachinepreference
  running: false
  template:
    spec:
      domain:
        cpu:
          sockets: 1
        devices: {}
      volumes:
      - containerDisk:
          image: registry:5000/kubevirt/cirros-container-disk-demo:devel
        name: containerdisk
EOF

The request is invalid: spec.template.spec.domain.cpu: Failure checking preference requirements: Insufficient CPU resources of 1 vCPU provided by VirtualMachine, preference requires 2 vCPU

$ ./cluster-up/kubectl.sh get virtualmachineinstancetype server.large -o json | jq .spec

selecting docker as container runtime

{
  "cpu": {
    "guest": 2
  },
  "memory": {
    "guest": "8Gi"
  }
}

$ ./cluster-up/kubectl.sh apply -f - << EOF
---
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: preference-requirements-demo
spec:
  instancetype:
    name: server.large
    kind: virtualmachineinstancetype
  preference:
    name: cirros
    kind: virtualmachinepreference
  running: false
  template:
    spec:
      domain:
        devices: {}
      volumes:
      - containerDisk:
          image: registry:5000/kubevirt/cirros-container-disk-demo:devel
        name: containerdisk
EOF

virtualmachine.kubevirt.io/preference-requirements-demo created
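The check exercised in this demo can be approximated with a few lines of Python. This is only a sketch of the idea, assuming the draft `spec.requirements.cpu.guest` field from the PR: the function name `check_cpu_requirement` is hypothetical, and the real validation lives in KubeVirt's Go admission code. The error text mirrors the messages shown above.

```python
def check_cpu_requirement(preference_spec, provided_vcpus, provider):
    """Return an error message if the preference's CPU requirement is not met.

    `provider` names what supplied the vCPUs, for example "instance type" or
    "VirtualMachine". Returns None when there is no requirement or it is satisfied.
    """
    required = preference_spec.get("requirements", {}).get("cpu", {}).get("guest")
    if required is None or provided_vcpus >= required:
        return None
    return (
        "Failure checking preference requirements: "
        f"Insufficient CPU resources of {provided_vcpus} vCPU provided by {provider}, "
        f"preference requires {required} vCPU"
    )


# Specs copied from the jq output in the demo above.
cirros_preference = {
    "devices": {"preferredDiskBus": "virtio", "preferredInterfaceModel": "virtio"},
    "requirements": {"cpu": {"guest": 2}},
}
server_tiny = {"cpu": {"guest": 1}, "memory": {"guest": "1.5Gi"}}
server_large = {"cpu": {"guest": 2}, "memory": {"guest": "8Gi"}}

tiny_error = check_cpu_requirement(
    cirros_preference, server_tiny["cpu"]["guest"], "instance type"
)
large_error = check_cpu_requirement(
    cirros_preference, server_large["cpu"]["guest"], "instance type"
)
print(tiny_error)   # rejected: 1 vCPU provided, 2 required
print(large_error)  # None: requirement satisfied
```

The same function covers the raw VirtualMachine case by passing the vCPU count derived from `spec.template.spec.domain.cpu` together with "VirtualMachine" as the provider.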